How we used the cloud to supercharge remote development

The Rippling application is a Python monolith. Our repository has 1.91M lines of code and 8.6K Python files, with 200+ developers contributing and merging 100+ PRs every day.

When I joined the development team, the application was already massive enough to put significant strain on our standard-issue development machines. Simply running it took considerable memory and CPU. Each time we opened PyCharm (our preferred IDE for development) and brought our entire stack up, the laptop’s resources were pushed to their limits. Add a Zoom session to the mix and the dreaded kernel_task would kick in, making our laptops unusable.

We needed to find a solution to our hardware resource challenges, one that played nice with a mostly remote development team and, most importantly, one that could handle a dynamic enterprise-scale Python repository. Here’s what we came up with.

Working with remote servers

From the start, we had a hunch that the solution to our hardware resourcing issues lay in the cloud. In June 2021, we started experimenting with offloading our application, and much of the compute load, to remote servers.

Our primary consideration was to preserve developers' existing workflow: using PyCharm to write code, run tests, access the backend server, debug issues, and rely on familiar keyboard shortcuts. For that reason, we rejected browser-based cloud development solutions such as AWS Cloud9 and GitHub Codespaces.

We wanted the UX of a local IDE with the resource power and scalability of the cloud. To meet these requirements, we chose EC2 servers hosted within our AWS account for the compute power while retaining PyCharm as the IDE.

In this approach:

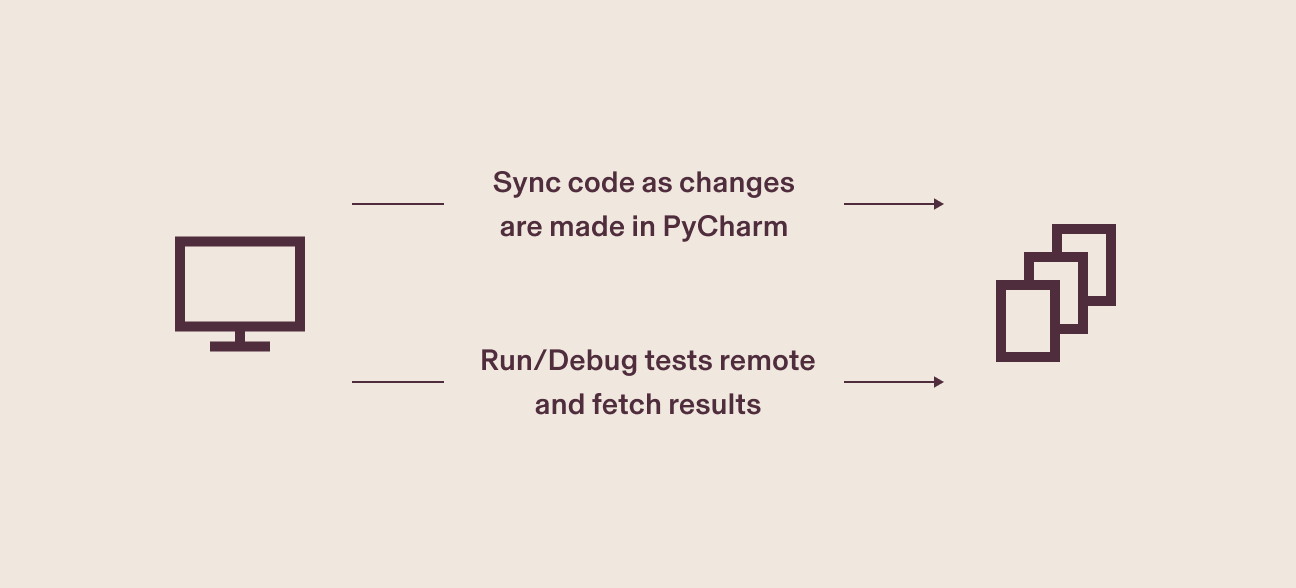

- Developers continue to write code in PyCharm

- As they write code, we sync it with the remote EC2 servers

- Developers use their usual shortcuts to run tests and debug the application

- Execution occurs on the EC2 servers with seamless integration to PyCharm’s output console

The sync & testing challenge

Our remote-first approach had many advantages over local machines, but we needed to meet two crucial requirements before rolling it out company-wide.

- The sync must be seamless and transparent with low latency. Developers should not wait for their changes to propagate every time they write code.

- PyCharm must remain the built-in way to run and debug tests. Developers should be able to keep using the same keyboard shortcuts, with minimal workflow disruption.

First, we tried PyCharm’s remote deployment, which uses SFTP to copy modified files. But the solution was not robust enough: whenever we switched branches or pulled new changes using git in the terminal, PyCharm could not reliably detect all the added, modified, and removed files and sync them accordingly. The results were tremendously inconsistent.

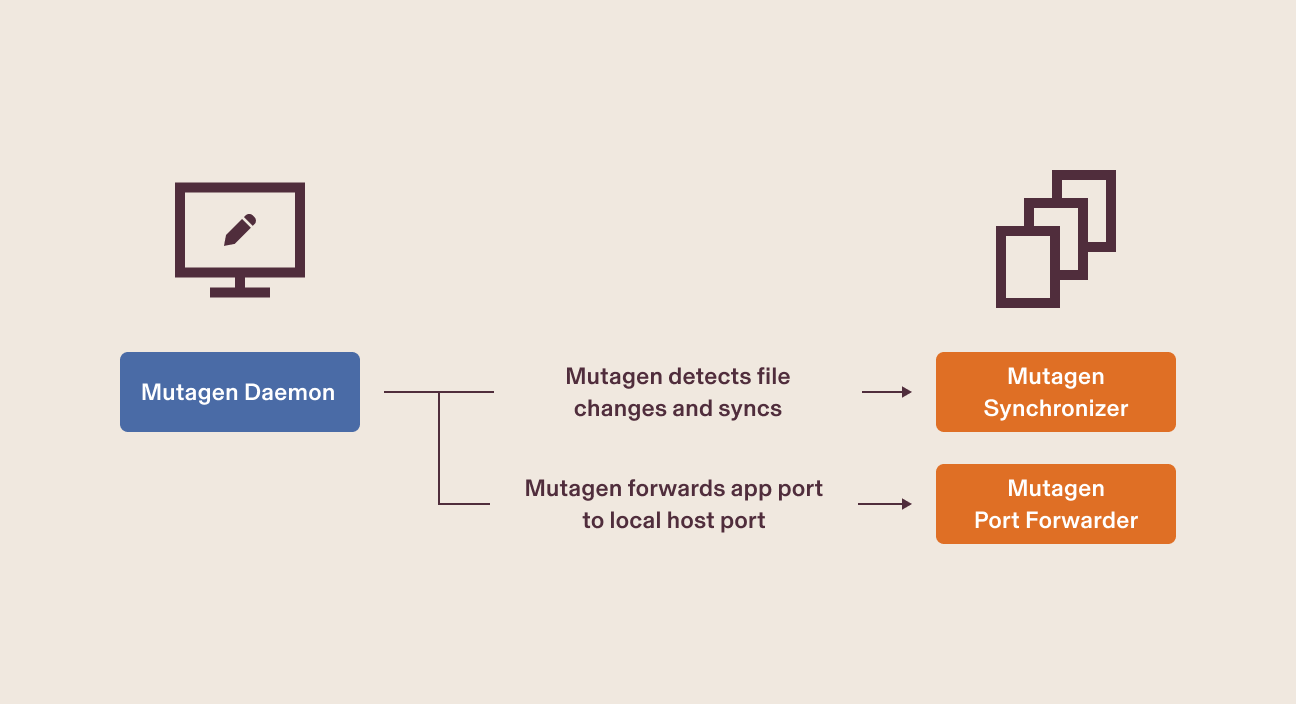

To work around PyCharm’s inconsistencies, we turned to Mutagen, a robust open-source file synchronization tool that can also forward ports. Essentially, it is a modern-day rsync and SSH port forwarder that can be configured using a version-controlled configuration file.

Mutagen uses the SSH protocol to sync with the remote instance. It runs an agent on both sides of the transmission to efficiently work out which changes need to be shipped. To establish the SSH session, we used AWS SSM: we configured the SSH client to proxy its connection through the AWS CLI, which let us reach our instances without exposing those EC2 servers publicly. Once Mutagen established the SSH session, it started syncing code and forwarding the necessary ports.
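An SSM proxy like the one described above usually comes down to a few lines of SSH client configuration. The sketch below renders such a stanza from Python. The `ProxyCommand` follows the pattern AWS documents for Session Manager; the function name and the `ubuntu` login user are assumptions made for illustration, not necessarily what our setup uses.

```python
from textwrap import dedent


def render_ssm_ssh_config(user: str = "ubuntu") -> str:
    """Return an ~/.ssh/config stanza that tunnels SSH through AWS SSM.

    `user` is a hypothetical login name; adjust it to the instance's AMI.
    """
    return dedent(
        f"""\
        # Match EC2 instance IDs (i-...) and managed instance IDs (mi-...).
        Host i-* mi-*
            User {user}
            ProxyCommand sh -c "aws ssm start-session --target %h --document-name AWS-StartSSHSession --parameters 'portNumber=%p'"
        """
    )


if __name__ == "__main__":
    # Print the stanza so it can be appended to ~/.ssh/config.
    print(render_ssm_ssh_config())
```

With a stanza like this in place, `ssh i-0123456789abcdef0` opens a session through SSM instead of a public IP, and any tool that shells out to `ssh` inherits the same route.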

As Mutagen also handled port forwarding, developers were able to access the application through the same port (localhost:8000) as they had in the past.
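To make the sync and port-forwarding step concrete, here is a minimal sketch that builds the two Mutagen CLI invocations involved. It assumes the SSH client can already reach the instance by its ID; the session names, the `ubuntu` user, and the directory paths are hypothetical placeholders rather than our actual values.

```python
import shlex


def mutagen_commands(instance_id, local_dir, remote_dir, user="ubuntu", port=8000):
    """Build argv lists for a Mutagen sync session and a forwarding session."""
    remote = f"{user}@{instance_id}"
    # Two-way file sync between the local checkout and the remote directory.
    sync = [
        "mutagen", "sync", "create", "--name=code",
        local_dir, f"{remote}:{remote_dir}",
    ]
    # Forward localhost:<port> on the laptop to the same port on the instance.
    forward = [
        "mutagen", "forward", "create", "--name=backend",
        f"tcp:localhost:{port}", f"{remote}:tcp:localhost:{port}",
    ]
    return sync, forward


if __name__ == "__main__":
    for cmd in mutagen_commands("i-0123456789abcdef0", "./app", "/home/ubuntu/app"):
        # Print the commands instead of running them; pass each list to
        # subprocess.run(cmd, check=True) to actually create the sessions.
        print(shlex.join(cmd))
```

Forwarding the same port number on both sides is what keeps `localhost:8000` working unchanged for developers.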

Running our application and tests via PyCharm

PyCharm’s remote deployment allowed us to use the remote instance’s Python interpreter to run Pytest. We provisioned the instance with Terraform so that our application was set up with everything it needed, such as system libraries and Python requirements. However, keeping that automated instance setup and the system libraries up to date was a challenge: we had to either recreate instances or run scripts on all of them.

Docker to the rescue

PyCharm supports using a Docker image’s Python as an interpreter. We already had a local setup where developers could run the application using their local Docker. This had already provided significant benefits by keeping our local developer environment as close to production as possible.

We were able to use PyCharm’s remote Docker interpreter by connecting it, over our VPN, to the EC2 instance’s Docker daemon listening on TCP. All tests ran seamlessly on the EC2 instance, and the results came back inside PyCharm. The debugger worked the same way. This meant developers needed no additional tools.
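Before pointing an IDE at a remote Docker daemon, it helps to confirm that the daemon actually answers over TCP. The sketch below uses only the standard library to call the Docker Engine API's `/_ping` endpoint. The default port 2375 (the conventional unencrypted Docker port) and the function name are assumptions; the source does not say which port or TLS settings were used.

```python
import http.client


def docker_daemon_reachable(host: str, port: int = 2375, timeout: float = 3.0) -> bool:
    """Return True if a Docker daemon answers GET /_ping on host:port."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("GET", "/_ping")
        response = conn.getresponse()
        # A healthy daemon replies with HTTP 200 and the body "OK".
        return response.status == 200 and response.read().strip() == b"OK"
    except (OSError, http.client.HTTPException):
        # Connection refused, timeout, DNS failure, or a malformed reply.
        return False
    finally:
        conn.close()
```

A call such as `docker_daemon_reachable("10.0.0.12")` (a made-up VPN address) returns `False` rather than raising when the VPN is down, which makes it a convenient pre-flight check.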

Bringing it all together

To unite our new cloud development environment, we wrote a few scripts that would:

- Log in to AWS

- Identify the instance for the developer

- Start the instance

- Start the sync using Mutagen along with port forwarding
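The four steps above can be sketched as a single Python function. This is an illustration under stated assumptions, not our actual scripts: it assumes login happens via `aws sso login`, that each developer's instance carries a hypothetical `Owner` tag, that the EC2 client is a boto3 client supplied by the caller, and that the Mutagen sessions are defined in a project file started with `mutagen project start`.

```python
import subprocess


def find_instance_id(ec2, owner: str) -> str:
    """Return the ID of the single non-terminated instance tagged for `owner`."""
    # "Owner" is a hypothetical tag key; use whatever tag marks ownership.
    response = ec2.describe_instances(
        Filters=[{"Name": "tag:Owner", "Values": [owner]}]
    )
    instances = [
        instance
        for reservation in response["Reservations"]
        for instance in reservation["Instances"]
        if instance["State"]["Name"] != "terminated"
    ]
    if len(instances) != 1:
        raise LookupError(f"expected 1 instance for {owner!r}, found {len(instances)}")
    return instances[0]["InstanceId"]


def start_dev_environment(owner, make_ec2_client, run=subprocess.run):
    """Log in, locate the developer's instance, start it, and start the sync."""
    run(["aws", "sso", "login"], check=True)           # 1. log in to AWS (assumed SSO)
    ec2 = make_ec2_client()                            # create the client after login
    instance_id = find_instance_id(ec2, owner)         # 2. identify the instance
    ec2.start_instances(InstanceIds=[instance_id])     # 3. start it and wait
    ec2.get_waiter("instance_running").wait(InstanceIds=[instance_id])
    run(["mutagen", "project", "start"], check=True)   # 4. sync and port forwarding
    return instance_id
```

With boto3 installed, this could be invoked as `start_dev_environment("alice", lambda: boto3.client("ec2"))`. Passing the client factory and the `run` function as parameters keeps the flow easy to exercise with fakes.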

To trigger the scripts, a few PyCharm configuration files came in handy. All the necessary actions are provided as PyCharm run configurations: developers run one to start the sync, start the backend server, or start or shut down the instance. Each configuration runs the corresponding script inside PyCharm’s terminal.

Finally, we used Terraform to set up the EC2 instances with the Docker daemon installed. Developers can manage all their dependencies and services through Docker. We persist Docker images, containers, and volumes on a separate disk, which makes the underlying EC2 instances ephemeral. In the end, a developer raises a pull request, gets approval to set up a cloud development environment, and starts contributing in a matter of minutes.
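One common way to keep Docker's state on a separate disk is the daemon's `data-root` setting, which relocates images, containers, and volumes. The sketch below renders a matching `daemon.json`. Whether our instances use this exact mechanism is an assumption, and the mount point `/mnt/docker-data` is a hypothetical path for the separately attached disk.

```python
import json


def render_daemon_json(data_root: str = "/mnt/docker-data") -> str:
    """Render a Docker daemon.json that stores all Docker state under data_root."""
    # "data-root" is the Docker daemon option for its storage directory; the
    # default path here is a made-up mount point for a separate volume.
    return json.dumps({"data-root": data_root}, indent=2)


if __name__ == "__main__":
    # The result would typically be written to /etc/docker/daemon.json
    # during instance provisioning, before the daemon starts.
    print(render_daemon_json())
```

Because everything Docker owns lives on that one volume, a replacement instance that mounts the same disk picks up the existing images and volumes.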

The benefits

1. Standardized setup for everyone

No cases of “works on my machine.” Our code runs the same way from development to production. As we had adopted the Dockerized setup with ephemeral instances, the runtime environment was the same for every developer.

2. Provisioned instances based on the developer's needs

We were able to use bigger CPU-optimized instances for developers in a team that worked on CPU-heavy operations. As we had “detailed monitoring” enabled, choosing the right size was metrics-driven and a simple configuration change.

3. Faster app and test-running times

We saw a 200% to 300% speed boost in our test runs. The EC2 instances were dedicated to running only the application and tests, with native Docker support.

4. Accelerated developer onboarding

We saw a significant reduction in the time it took to set up the development environment for new hires—from two days to two hours.

Rippling is hiring!

As a member of the infra team, you’ll join a close-knit group of 10 as we help more than 200 engineers in India and the US run faster and safer. We’re obsessed with quality engineering and deep technical work. Even though we’ve got chops from some of the biggest tech companies on the planet, we’re always learning. No egos here.

We’re looking for Pythonistas to build frameworks, optimize, and find innovative-yet-simple solutions to growing problems that arise as Rippling scales multifold. You’ll guide other engineers, help them debug, understand their true needs, and help build industry-leading solutions.

An interest in open source is a plus as most of the code you’ll write will be business-agnostic.

Some solutions will demand knowledge across the entire stack, i.e., how the code is scaled, deployed, and monitored, so knowledge of containers, deployment, instrumentation, etc. will make you more effective. It's not a problem if you don't have exposure, as long as you are willing to learn.