10 Lessons: Containerizing our Django Backend

When we started Rippling four years ago, we provisioned our production servers with Chef cookbooks. It was simple. It worked. As we grew, however, that simple model no longer served all of our needs: high-availability deployments, library changes, and monitoring took more and more effort and coordination.

Containers are a natural fit for this kind of challenge, so we recently migrated our infrastructure to them. We learned some good lessons along the way that we’d like to share.

A little history

Before diving into the details, let me give you an overview of our stack.

Our backend is a Python-based HTTP API written with the Django framework, using MongoDB as its data store. It exposes ~8000 API endpoints and processes 32M requests per day on average. We have a home-grown framework for running background jobs, and we use uWSGI and nginx as our application and web servers. Before the migration, we deployed our services with AWS OpsWorks.

We started this journey by writing a Dockerfile and getting it running locally. To move quickly, we mirrored the tools and configuration from our Chef cookbooks (e.g., uWSGI + nginx, the nginx config, the OS version, and packages).

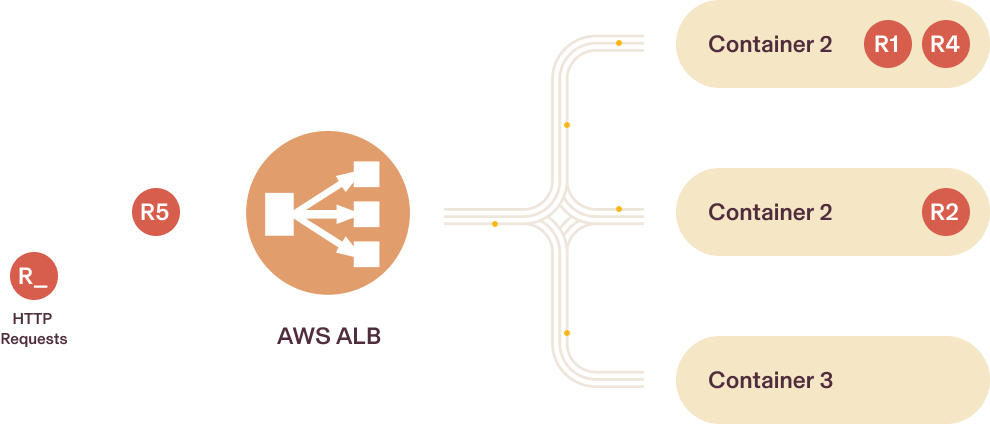

We decided to use AWS ECS to run our containers because it was simpler and the team had prior experience with that. We built the ECS stack and started with 5% of traffic being routed to the new stack. We completed this migration in a phased manner. This is how our new stack looks at a high level:

Lessons learned

Lesson 1: Run uWSGI and nginx together in containers

The official Docker documentation recommends running one process per container. Our initial plan was to run only uWSGI in the container, in HTTP mode, receiving traffic directly from the AWS ALB. This turned out to be unstable: whenever uWSGI processes restarted, requests sent by the ALB failed with 502 errors. Others have observed the same behaviour (ref). So we switched to running both uWSGI and nginx inside the container, with nginx as the foreground process.

Lesson 2: Avoid spawning empty shells by enabling the need-app option in uWSGI

uWSGI is a great application server for Python, but its default configuration leaves out some important settings. One of them is the `need-app` option, which makes uWSGI refuse to start if it cannot load the application. Without it, uWSGI ignores errors raised while loading the app (syntax errors, import errors, memory issues) and starts anyway as an empty shell that returns 500 for every request.
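To make Lessons 1 and 2 concrete, here is a minimal Python sketch of a container entrypoint that starts uWSGI with `need-app` enabled and then replaces itself with nginx running in the foreground. It rests on assumptions: the `/etc/uwsgi/app.ini` path is hypothetical, and the uWSGI socket and the matching nginx proxy settings are assumed to live in config files not shown here.

```python
import os
import subprocess

# Hypothetical path; the socket that nginx proxies to is assumed to be set in this file.
UWSGI_INI = "/etc/uwsgi/app.ini"


def uwsgi_command(ini_path: str = UWSGI_INI) -> list[str]:
    # --need-app makes uWSGI exit if the application fails to load,
    # instead of starting workers that answer every request with a 500.
    return ["uwsgi", "--ini", ini_path, "--need-app"]


def nginx_command() -> list[str]:
    # "daemon off;" keeps nginx in the foreground as the container's main process.
    return ["nginx", "-g", "daemon off;"]


def main() -> None:
    # Start uWSGI as a background child process.
    subprocess.Popen(uwsgi_command())
    # Replace this Python process with nginx (same PID), so nginx is the
    # process that receives the container's stop signal.
    cmd = nginx_command()
    os.execvp(cmd[0], cmd)
```

The image's ENTRYPOINT would run a script that calls `main()`. One caveat of this simple layout: if uWSGI exits (for example, because `need-app` stopped it), nginx keeps running and answers with 502s, so it is the ALB health check that marks such a task unhealthy.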

Lesson 3: Ensure graceful nginx shutdown

When ECS stops a container, it issues the equivalent of the `docker stop` command (if you are curious, you can look at the ECS agent code). This sends the container’s stop signal, SIGTERM by default; if the container still has not stopped after a timeout, ECS sends SIGKILL to force it to exit.

Our containers run nginx as the foreground process. When nginx receives SIGTERM, it performs a “fast shutdown”: it immediately closes open connections, including those with requests still in flight, so the ALB answers those requests with a 502 status.

The better approach is a graceful shutdown, in which nginx finishes processing in-flight requests before exiting. nginx performs a graceful shutdown when it receives SIGQUIT, so we changed the container’s stop signal to SIGQUIT by adding `STOPSIGNAL SIGQUIT` to the Dockerfile.
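The stop sequence is easy to picture as code. Below is a minimal, POSIX-only Python sketch that mimics the behaviour described above for a single process: deliver the stop signal, wait for a grace period, then fall back to SIGKILL. It is not the ECS agent’s implementation; the function name and the 30-second default grace period are assumptions for illustration.

```python
import signal
import subprocess


def stop_container_process(
    proc: subprocess.Popen,
    stop_signal: int = signal.SIGTERM,
    timeout: float = 30.0,
) -> int:
    """Mimic `docker stop`: send the stop signal, wait, then SIGKILL.

    Returns the exit status (a negative N means "terminated by signal N").
    """
    proc.send_signal(stop_signal)
    try:
        # Grace period: with SIGQUIT, nginx uses this window to finish in-flight requests.
        return proc.wait(timeout=timeout)
    except subprocess.TimeoutExpired:
        proc.kill()  # SIGKILL on POSIX; it cannot be caught or ignored
        return proc.wait()
```

Passing `stop_signal=signal.SIGQUIT` corresponds to an image built with `STOPSIGNAL SIGQUIT`: nginx gets the whole grace period to drain in-flight requests, and SIGKILL only arrives if it overruns, so the grace period needs to be longer than the slowest request.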

Lesson 4: Use AWS Secrets Manager for versioned config

To inject environment variables into ECS tasks, we could use Parameter Store, Secrets Manager, or set the variables directly in the task definition.

- When directly specified in the task definition, the secrets get duplicated across multiple task definitions. That’s bad practice and also a security risk.

- ECS can reference Parameter Store values through `valueFrom` attributes in the task definition. However, there is no versioning support, which can cause inconsistencies during scaling actions or reverts. For example, tasks started during a scale-out read the parameter’s current value rather than the value it had when the task definition was created.

- Moving to AWS Secrets Manager, with versioned secrets referenced in the task definition, solved this problem. The secrets live only in Secrets Manager, and ECS injects them when a container starts.
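As an illustration of pinning, the Python sketch below builds an entry for an ECS container definition’s `secrets` list whose `valueFrom` names an exact secret version, using the `<secret-arn>:<json-key>:<version-stage>:<version-id>` reference format from the ECS documentation. The helper name, secret name, ARN, and version ID are hypothetical, and keeping several values as JSON keys in one secret is an assumption.

```python
def pinned_secret(name: str, secret_arn: str, version_id: str, json_key: str = "") -> dict:
    """Build one entry for a container definition's `secrets` list.

    ECS resolves Secrets Manager references of the form
        <secret-arn>:<json-key>:<version-stage>:<version-id>
    The version stage is left empty because an exact version ID is pinned.
    """
    return {"name": name, "valueFrom": f"{secret_arn}:{json_key}::{version_id}"}


# Hypothetical values, for illustration only.
container_secrets = [
    pinned_secret(
        "DB_PASSWORD",
        "arn:aws:secretsmanager:us-east-1:123456789012:secret:app-config-AbCdEf",
        version_id="EXAMPLE1-90ab-cdef-fedc-ba987EXAMPLE",
        json_key="db_password",
    )
]
```

Because the version ID is baked into each task definition revision, tasks launched later by a scale-out, or by a revert to an older revision, resolve the same values that revision was registered with. Changing a value then means registering a new task definition revision.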

Lesson 5: Queue deploy-time tasks during container startup

When we release code to production, we have to run data migrations, email provider template updates, and similar tasks with the new version of the code. These tasks must run once, right after the deployment, and ECS has no built-in support for this. We came up with two options:

- We could create a task definition for the deploy-time command, run it with the ECS RunTask command, and then start the service. We did not go with this because it meant orchestrating steps outside the container orchestrator, which would have made error handling and rollback harder to implement.

- Instead, we queued these tasks at container startup using our background job processing framework. The framework ensures a task runs only once even if it is queued multiple times, and the tasks are idempotent as well.

There was one gotcha: the tasks must run only in containers that execute the updated code. Because our deployments are blue-green, old containers keep running alongside the new ones for a brief period. So we injected the git commit’s Unix timestamp into the container and had each queued task specify the minimum commit timestamp it requires. This ensures that only new containers run the queued deploy-time tasks.
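The minimum-timestamp check can be sketched in a few lines of Python. This is a simplified in-memory model, not our job framework: `InMemoryQueue`, `DeployTask`, the task names, and the dedupe-by-key rule are hypothetical, and the commit time is assumed to be exposed as an environment variable named after the `GIT_HEAD_EPOCH_TIME` build arg in Lesson 8.

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass, field


@dataclass
class DeployTask:
    key: str               # run-once key, e.g. "data-migrations:<git hash>"
    min_commit_epoch: int  # only code committed at or after this Unix time may run it


@dataclass
class InMemoryQueue:
    """Hypothetical stand-in for a job framework that runs each key only once."""
    pending: list = field(default_factory=list)
    seen: set = field(default_factory=set)
    done: list = field(default_factory=list)

    def enqueue(self, task: DeployTask) -> None:
        if task.key in self.seen:  # already queued by another new container
            return
        self.seen.add(task.key)
        self.pending.append(task)


def on_container_start(queue: InMemoryQueue, env: Mapping[str, str] = os.environ) -> None:
    """Every new container queues the deploy-time tasks for its own commit."""
    git_hash = env["GIT_HASH"]
    commit_epoch = int(env["GIT_HEAD_EPOCH_TIME"])
    for name in ("data-migrations", "email-templates"):  # hypothetical task names
        queue.enqueue(DeployTask(f"{name}:{git_hash}", commit_epoch))


def work_once(queue: InMemoryQueue, container_commit_epoch: int) -> None:
    """One worker pass: run only the tasks this container's code is new enough for."""
    still_pending = []
    for task in queue.pending:
        if container_commit_epoch >= task.min_commit_epoch:
            queue.done.append(task.key)  # a real worker would execute the task here
        else:
            still_pending.append(task)  # old (blue) container: leave it for a new one
    queue.pending = still_pending
```

An old container that polls the queue during the blue-green overlap simply leaves the tasks for a new one. The comparison relies on each deployment’s commit timestamp being newer than the previous deployment’s.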

Lesson 6: Be careful with the COPY command

We use a multi-stage Docker build: Chocolatey is built in a source image, and the generated artifacts are then copied into our main target image. To copy all the necessary libraries from the source image, we originally used:

```dockerfile
COPY --from=source /usr/lib/ /usr/lib/
```

COPY copies directories recursively: files already in the target image are kept, files that exist only in the source image are added, and for paths that exist in both, the source version overwrites the target.

In our case, the symlink for a system shared library, libxxx.so.6, pointed to different versions of the library in the source and target images. The COPY replaced the target’s symlink with the source’s, unexpectedly upgrading the library and causing the application to fail at runtime. Explicitly copying only the necessary libraries avoided these side effects, so we replaced the command above with:

```dockerfile
COPY --from=source /usr/lib/mono /usr/lib/mono
COPY --from=source /usr/lib/libmono* /usr/lib/
```
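Before widening a COPY like the first one, it can help to list which paths it would overwrite. Below is a hypothetical Python helper, not a Docker feature, that compares two directory trees (for example `/usr/lib` extracted from the source and target images with `docker create` and `docker cp`) and reports paths that exist in both but differ, comparing symlinks by target and regular files by content.

```python
import filecmp
import os


def find_conflicts(src_root: str, dst_root: str) -> list[str]:
    """Return paths (relative to the roots) that a recursive copy from src_root
    onto dst_root would overwrite with different content or a different link."""
    conflicts = []
    for dirpath, dirnames, filenames in os.walk(src_root):
        rel_dir = os.path.relpath(dirpath, src_root)
        # os.walk lists symlinks to directories under dirnames; check those too.
        linked_dirs = [d for d in dirnames if os.path.islink(os.path.join(dirpath, d))]
        for name in filenames + linked_dirs:
            rel = os.path.normpath(os.path.join(rel_dir, name))
            src = os.path.join(src_root, rel)
            dst = os.path.join(dst_root, rel)
            if not os.path.lexists(dst):
                continue  # only in the source: the copy just adds it
            if os.path.islink(src) or os.path.islink(dst):
                # Compare symlinks by target, as with libxxx.so.6 above.
                same = (os.path.islink(src) and os.path.islink(dst)
                        and os.readlink(src) == os.readlink(dst))
            else:
                same = os.path.isfile(dst) and filecmp.cmp(src, dst, shallow=False)
            if not same:
                conflicts.append(rel)
    return sorted(conflicts)
```

An empty result means the broad COPY would only add files; anything listed, such as a shared-library symlink, is a candidate for the kind of silent upgrade described above.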

Lesson 7: Use a container level health check for background jobs

When we deploy new code to the API containers, the ALB performs an HTTP health check to decide when a new container is ready to receive traffic. Only after the new container is healthy and stable does ECS stop the old one.

We did not have an equivalent check for our background job runners. Once, bad code deployed to the runners made them crash shortly after starting. ECS considered the new containers successfully started, so it stopped the old ones, and the service fell into an endless cycle of tasks starting and stopping.

To solve this problem, we added ECS container-level health checks that verify the container can connect to the queue and pop jobs to process. When the container starts, it connects to the queue and, if that succeeds, creates a file. The health check simply verifies that the file exists.
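A minimal Python sketch of this marker-file pattern follows. The queue object’s `connect()` and `pop()` methods are hypothetical stand-ins for a real job framework’s client, and the `/tmp/worker-healthy` path is an assumption.

```python
import os
from pathlib import Path

HEALTH_FILE = "/tmp/worker-healthy"  # hypothetical marker path


def run_worker(queue, handle, path: str = HEALTH_FILE, iterations=None) -> None:
    """Worker loop that marks itself healthy only after talking to the queue.

    `queue` is assumed to expose connect() and pop() (returning a job or None).
    """
    Path(path).unlink(missing_ok=True)  # never start with a stale marker
    queue.connect()  # raises if the queue is unreachable, so no marker is written
    count = 0
    while iterations is None or count < iterations:
        job = queue.pop()
        if count == 0:
            Path(path).write_text("ok")  # first successful pop: report healthy
        if job is not None:
            handle(job)
        count += 1


def healthcheck(path: str = HEALTH_FILE) -> int:
    """Exit status for the container health check: 0 = healthy, 1 = unhealthy."""
    return 0 if os.path.exists(path) else 1
```

In the ECS container definition, the health check command could then be as small as `["CMD-SHELL", "test -f /tmp/worker-healthy"]`. A container that cannot reach the queue, or crashes before its first pop, never reports healthy.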

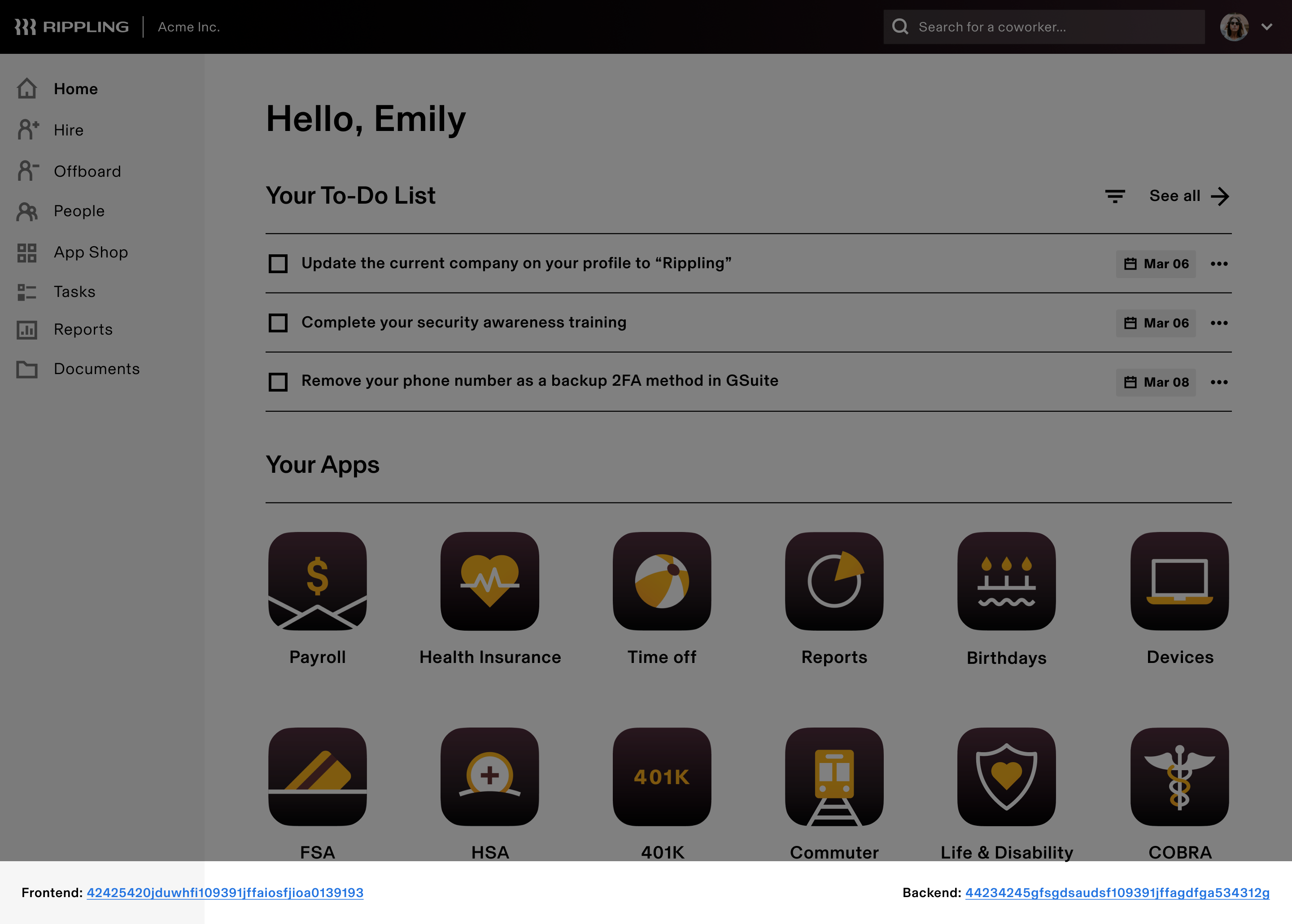

Lesson 8: Make the container say the code version

When developers deploy, they immediately want to know two things: 1) did it succeed, and 2) is the app running my version of the code? So when an image is built, we inject the git commit SHA into it. Here is the build command (GIT_HEAD_EPOCH_TIME carries the git commit Unix timestamp used for the deploy-time tasks in Lesson 5):

```shell
docker build -t rippling-main:latest --build-arg GIT_HASH="$(git rev-parse HEAD)" \
  --build-arg GIT_HEAD_EPOCH_TIME="$(git show -s --format=%ct HEAD)" .
```

We show this value in the footer of our admin site so that developers always know where things stand.
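One way to get the value into page templates in Django is a context processor. This sketch is an assumption about the wiring, not necessarily how our admin site does it; the function name, the `myproject.context_processors` module path, and the `"unknown"` fallback are hypothetical.

```python
import os


def code_version(request):
    """Django context processor exposing the deployed commit to templates.

    Assumes GIT_HASH is set in the container environment (via ENV in the Dockerfile).
    """
    git_hash = os.environ.get("GIT_HASH") or "unknown"
    return {"GIT_HASH": git_hash, "GIT_HASH_SHORT": git_hash[:7]}


# settings.py (hypothetical module path):
# TEMPLATES = [{
#     ...,
#     "OPTIONS": {"context_processors": [..., "myproject.context_processors.code_version"]},
# }]
```

An overridden `admin/base_site.html` could then render `{{ GIT_HASH_SHORT }}` inside the admin’s `footer` block.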

Lesson 9: Ensure mandatory build-args are set during the build

In the previous example, where the git commit hash is passed as a build-time argument, we ran into a problem when the build args were left out of the docker build command. The Dockerfile declared the argument like this:

```dockerfile
ARG GIT_HASH
ENV GIT_HASH=$GIT_HASH
```

By default, docker build does not require declared build arguments to be passed. In the example above, the build completes successfully even if GIT_HASH is not set; the variable is simply empty. When such an image was deployed to production, the application crashed because of the missing environment variable.

As a fix, we now check during the build that the build arg is non-empty, so docker build fails when it is not passed:

```dockerfile
RUN test -n "$GIT_HASH"
```
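Quoting the variable in that check matters. With an empty value, an unquoted `test -n $GIT_HASH` expands to plain `test -n`, and a one-argument `test` only checks that its argument (here the literal `-n`) is non-empty, so it succeeds and the build carries on. The small Python harness below runs both forms through `/bin/sh -c`, the way a shell-form `RUN` executes; `sh_status` is a hypothetical helper name.

```python
import subprocess


def sh_status(script: str, env: dict) -> int:
    """Run a snippet with /bin/sh -c, as a shell-form Dockerfile RUN does,
    and return its exit status (a non-zero status fails the build)."""
    return subprocess.run(["/bin/sh", "-c", script], env=env).returncode


for check in ('test -n $GIT_HASH', 'test -n "$GIT_HASH"'):
    status = sh_status(check, {"GIT_HASH": ""})
    print(f"{check!r:24} with empty GIT_HASH -> exit {status}")
```

With an empty `GIT_HASH`, the unquoted check exits 0 and lets the build pass, while the quoted one exits 1 and stops it.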

Lesson 10: Distribute traffic by queue size instead of round-robin

The default load-balancing algorithm for an AWS target group is round-robin. That works well as long as requests take roughly the same time to process, which is not always the case. When a few requests are much slower than the rest, round-robin keeps assigning new requests to containers that are still processing slow ones, overloading them, which can lead to 502 errors from those containers. We switched to the “Least outstanding requests” algorithm, which routes each new request to the container with the fewest pending requests. In the diagram above, least outstanding requests would send the new request (R5) to the free container, i.e., Container 3.
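The difference is easy to see in a toy simulation. The Python sketch below is a simplified model, not how the ALB is implemented: one request arrives per time unit, each container serves its requests one at a time in order, and “outstanding” means assigned but not yet finished. On AWS, the switch itself is the target group attribute `load_balancing.algorithm.type`, set to `least_outstanding_requests`.

```python
def simulate(durations: list[float], n_containers: int, policy: str):
    """Return (container index per request, latency per request)."""
    busy_until = [0.0] * n_containers  # when each container becomes free
    finish_times = [[] for _ in range(n_containers)]
    chosen, latencies = [], []
    for i, duration in enumerate(durations):
        now = float(i)  # request i arrives at t = i
        if policy == "round_robin":
            c = i % n_containers
        elif policy == "least_outstanding":
            outstanding = [sum(f > now for f in fs) for fs in finish_times]
            c = outstanding.index(min(outstanding))  # ties go to the lowest index
        else:
            raise ValueError(f"unknown policy: {policy}")
        finish = max(now, busy_until[c]) + duration  # wait behind earlier requests
        busy_until[c] = finish
        finish_times[c].append(finish)
        chosen.append(c)
        latencies.append(finish - now)
    return chosen, latencies


# One slow request (10 time units) followed by five fast ones, on 3 containers.
rr = simulate([10, 1, 1, 1, 1, 1], 3, "round_robin")
lor = simulate([10, 1, 1, 1, 1, 1], 3, "least_outstanding")
```

In the round-robin run, the fourth request lands on the container still busy with the slow first request and takes 8 time units instead of 1; least outstanding requests never sends new work to that container.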

To conclude

It’s been a few months since we containerized, and we are happy with the results. Our deployments are smoother and highly available. Developers can change system libraries faster and reliably reproduce bugs on their own machines. The infra team has better abstractions to work with, and launching and scaling new services is easier. Our next step is decoupling our modules and moving to a microservices architecture. More on that in a future post!