Scaling stories at Rippling: Fast Serializers, Part 2

This two-part series is a behind-the-scenes look at our journey scaling Rippling without sacrificing our execution speed. In part one, we described how site performance had become unacceptably slow, particularly for our largest customers, and narrowed the issue down to a bottleneck in our serializers. We characterized all of the parameters that contributed to the bottleneck. Now let’s dive into how we solved it.

Faster Read Serializers

Serializers are a bottleneck for our read path, so we decided to split up the read and write path and develop a much faster Read Serializer for our needs. We built the Read Serializer from scratch, i.e. not on top of Django Rest Framework (DRF) Serializers. We didn’t reinvent the wheel though, and made sure to delegate to DRF and existing library code where necessary.

Minimize transforming DB data while maintaining backwards compatibility

MongoEngine object instantiation is slow, and the final serialized output is pretty close to what the DB gives us, so our new Serializer tries to skip MongoEngine as much as possible.

- For fields, we skip MongoEngine entirely and use the PyMongo dictionary data directly. PyMongo is our DB driver and provides raw DB data as Python dictionaries.

- Primitive fields don’t need any further conversion from what PyMongo gives us: they are already “JSON” and ready to be sent to the frontend.

- For non-primitive fields like dates, we delegate translation to DRF Fields. We did explore using non-DRF field serializers as there are much faster alternatives out there. In practice we found that this kind of translation did not account for a large enough proportion of read latency to warrant using a different framework and possibly breaking backwards compatibility.
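
As a rough illustration of the list above, here is a minimal sketch of serializing straight from a raw driver dictionary. The names `serialize_raw` and `to_json_value` are hypothetical, and `isoformat()` is only a stand-in for delegating to a DRF field; this is not the actual Read Serializer.

```python
from datetime import date, datetime

# Types that are already JSON-compatible and can pass through untouched.
PRIMITIVES = (str, int, float, bool, type(None))


def to_json_value(value):
    """Convert one raw driver value into something JSON-serializable."""
    if isinstance(value, PRIMITIVES):
        return value  # primitive: no conversion needed
    if isinstance(value, (datetime, date)):
        # Stand-in (assumption) for delegating to a DRF field.
        return value.isoformat()
    if isinstance(value, dict):
        return {key: to_json_value(item) for key, item in value.items()}
    if isinstance(value, (list, tuple)):
        return [to_json_value(item) for item in value]
    return str(value)  # hypothetical fallback for other driver types


def serialize_raw(raw_doc, fields):
    """Serialize the selected fields from a raw PyMongo-style dict."""
    return {name: to_json_value(raw_doc.get(name)) for name in fields}
```

No model object is instantiated anywhere in this path, which is the point: the only per-field work is a type check and, for non-primitives, one conversion.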

Faster MongoEngine Document instantiation

We still needed to support serializing properties to be backwards compatible. We were forced to instantiate MongoEngine objects to compute the properties since they are defined on MongoEngine models. There are two approaches we could have taken here to support properties:

- Add dependency hints to properties so that we could decide ahead of time which fields we actually need from our DB. This is called “projecting” over the data. Fewer fields from the DB means fewer fields passed to MongoEngine, which means faster instantiation time.

- Make MongoEngine Document instantiation lazy. Defer the processing work that is done on each field in the constructor and only do that work when the field is accessed on the Document.
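
The lazy approach can be sketched in plain Python, without MongoEngine. `LazyDocument`, `converters`, and `Employee` are hypothetical names for illustration: the constructor only stores the raw data, and each field is converted the first time it is read.

```python
class LazyDocument:
    """Wraps a raw dict and converts each field only on first access."""

    # Hypothetical per-field converters; a real model would derive these
    # from its field definitions.
    converters = {}

    def __init__(self, raw):
        # The constructor does constant work: it just stores the raw data.
        self._raw = raw
        self._cache = {}

    def __getattr__(self, name):
        # Only called when normal attribute lookup fails, i.e. for fields.
        if name.startswith("_"):
            raise AttributeError(name)
        if name not in self._cache:
            try:
                value = self._raw[name]
            except KeyError:
                raise AttributeError(name) from None
            convert = self.converters.get(name, lambda v: v)
            self._cache[name] = convert(value)
        return self._cache[name]


class Employee(LazyDocument):
    converters = {"salary": int}

    @property
    def full_name(self):
        # Touches two fields; every other field stays unconverted.
        return f"{self.first_name} {self.last_name}"
```

Computing `full_name` on an `Employee` converts only `first_name` and `last_name`, however many other fields the raw document carries.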

We tried both of these approaches in production and decided to go with the Lazy Document approach over Projections. Here are the tradeoffs:

- Lazy Documents carry the higher overhead of requesting all fields from the DB. In production this doesn’t matter for performance because the DB is not the bottleneck. In the future, if we run into DB load issues that can’t be addressed by sharding, or if DB queries become the critical path for reads, we can revisit using Projections.

- Projections are hard to adopt in our codebase because we have to manually annotate each property (there are thousands of them) with dependency hints. They are also brittle because if a developer changes some code that is used upstream by a property, they may not update the property’s dependencies, which can lead to data being lost and crashes in our backend.
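
To make the brittleness concrete, here is a small sketch of what dependency hints might look like. The `depends_on` decorator and `projection_for` helper are hypothetical. The resulting dictionary has the `{field: 1}` shape that PyMongo accepts as a projection.

```python
def depends_on(*fields):
    """Hypothetical decorator: record which DB fields a property reads."""
    def wrap(func):
        func.depends_on = frozenset(fields)
        return property(func)
    return wrap


class Employee:
    def __init__(self, raw):
        self.raw = raw

    @depends_on("first_name", "last_name")
    def full_name(self):
        return f"{self.raw['first_name']} {self.raw['last_name']}"

    @depends_on("first_name")  # stale hint: the body also reads "title"
    def greeting(self):
        return f"Hello, {self.raw['title']} {self.raw['first_name']}"


def projection_for(model, property_names):
    """Union of the declared dependencies, as a {field: 1} projection."""
    needed = set()
    for name in property_names:
        prop = getattr(model, name)  # class-level access returns the property
        needed |= prop.fget.depends_on
    return {field: 1 for field in sorted(needed)}
```

`projection_for(Employee, ["greeting"])` requests only `first_name`, so computing `greeting` on the projected data raises `KeyError` for `title`. Nothing flags the stale hint until the property runs against real data.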

Faster Read Serializer Design

Our final Read Serializer design addressed many of the root causes of slow serializer performance. We only used the abstractions that we needed and the result was a serializer that was faster, backwards compatible, and highly customizable for our application’s read path requirements.

Optimize permissions

We also did some due diligence around optimizing permissions and found two simple optimizations which made evaluating permissions twice as fast:

- Caching model field permission definitions in memory. It was taking a decent chunk of time to recompute which field permissions a model has for each object that we are serializing.

- Both read and write permissions were being evaluated during the read path. We refactored this to just evaluate read permissions on the read path.

For a majority of requests, this didn’t have a large impact because most requests are made by users with simple ACLs. But this did have a large performance impact for users like admins who have dense ACLs. In one benchmark we found that these two changes reduced latency of a very common API endpoint by 20% for admins.
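
Both optimizations can be sketched together. The registry `FIELD_PERMISSIONS` and the permission names below are made up for illustration. The idea is simply that the per-model definition is computed once and cached, and that only read rules are consulted on the read path.

```python
from functools import lru_cache
from types import MappingProxyType

# Hypothetical registry of per-model field permission rules.
FIELD_PERMISSIONS = {
    "Employee": {
        "name": {"read": "basic", "write": "hr"},
        "salary": {"read": "payroll", "write": "payroll"},
    }
}


@lru_cache(maxsize=None)
def read_permissions(model_name):
    """Build the field -> required read permission map once per model."""
    rules = FIELD_PERMISSIONS[model_name]
    # Write rules are deliberately ignored: this is the read path.
    required = {field: rule["read"] for field, rule in rules.items()}
    return MappingProxyType(required)  # read-only view of the cached map


def readable_fields(model_name, user_permissions):
    """Fields of the model that this user is allowed to read."""
    required = read_permissions(model_name)
    return [f for f, perm in required.items() if perm in user_permissions]
```

Serializing a thousand `Employee` objects then builds the permission map once rather than a thousand times.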

Adoption and Results

Our RoleWithCompany endpoint provides basic employee data and powers every product at Rippling. It has 1.1 million hits per day and we were hearing from product teams that it was a bottleneck in loading several of their pages. We set a milestone to use the new Read Serializer for this endpoint as it would have the largest benefit out of the gate.

Experimentation and Rollout

Downtime for the RoleWithCompany endpoint was unacceptable, so we validated performance and correctness of the new serializer with an approach called “double reading.” For a small percentage of requests to the endpoint, we would run two serializations, time each one, compare the outputs, and log the resulting diff and latency metrics to Datadog.

Our application is single-threaded, so this meant that we were running experiment and control serializations one after the other. We considered using async workers so that our experiment would not block the request, but we decided against it as this would add complexity to the project and we weren’t being too disruptive to production traffic with our low sampling rate. We also set up failsafes so that the thread would never crash or time out because of our experiment.
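
A minimal sketch of the double-read pattern, under stated assumptions: `double_read` and `diff_keys` are hypothetical names, the standard `logging` module stands in for the metrics client, and only the crash failsafe is shown (a timeout guard is omitted).

```python
import logging
import random
import time

logger = logging.getLogger("double_read")
_MISSING = object()


def diff_keys(expected, actual):
    """Top-level keys whose values differ between the two outputs."""
    keys = expected.keys() | actual.keys()
    return sorted(
        k for k in keys
        if expected.get(k, _MISSING) != actual.get(k, _MISSING)
    )


def double_read(obj, control, experiment, sample_rate=0.01, rng=random.random):
    """Always return the control output; sometimes also run the experiment."""
    start = time.perf_counter()
    expected = control(obj)
    control_s = time.perf_counter() - start

    if rng() < sample_rate:
        try:
            start = time.perf_counter()
            actual = experiment(obj)
            experiment_s = time.perf_counter() - start
            # Stand-in for emitting latency and diff metrics.
            logger.info(
                "control=%.6fs experiment=%.6fs diff=%s",
                control_s, experiment_s, diff_keys(expected, actual),
            )
        except Exception:
            # Failsafe: the experiment must never break the real request.
            logger.exception("double_read experiment failed")
    return expected
```

The caller always receives the control result, so a bug in the experimental serializer shows up as a logged diff or exception rather than as a wrong response.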

Double Reading was incredibly valuable as we were able to prove early on that our new serializer was twice as fast. We were able to catch correctness issues and iterate on them using production data but without affecting production or causing downtime.

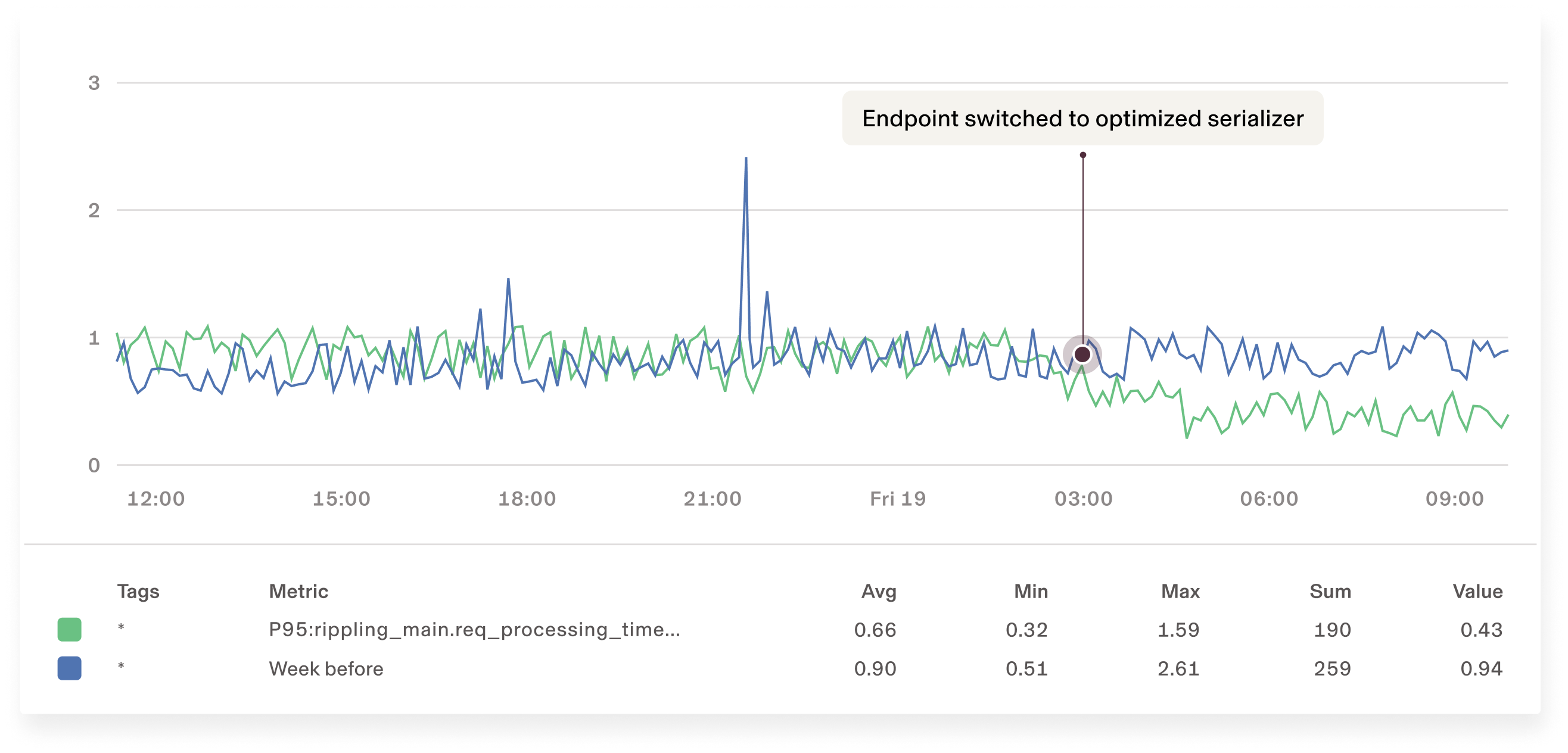

Once we rolled this out, we immediately saw a drop in the p95 latency for this endpoint.

The RoleWithCompany API endpoint is batched from the frontend and it suffers from long tail latency as a result: the slowest API calls in the batch are often the bottleneck to page loading. Lowering the p95 latency of this endpoint on the backend improved the long latency tail of this batched endpoint and directly improved page load time across the site.
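
A quick back-of-the-envelope calculation shows why the tail matters so much for batches. It assumes call latencies are independent, which is a simplification, and the function name is hypothetical.

```python
def prob_batch_hits_tail(calls_in_batch, quantile=0.95):
    """Chance that at least one call in a batch is slower than the quantile.

    Assumes each call's latency is independent of the others.
    """
    return 1 - quantile ** calls_in_batch
```

Under that assumption, a single call lands in the slowest 5% one time in twenty, but a batch of ten calls contains at least one such call roughly 40% of the time. What is a rare event for one call becomes a common one for the page.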

Adoption

We successfully built a faster serializer, applied it to a critical endpoint, and saw faster page load times. Now it was time to make the new read serializers the standard across our codebase.

- We evangelized the new read serializer, getting teams to convert existing custom serializers to the new framework by advertising the 2–3x serialization speedup that we had observed in various endpoints. The new serializers have a similar interface to DRF serializers to make this transition relatively easy.

- We wanted to use the new read serializer for endpoints that did not have a custom serializer and instead used the default serializer. To do this we added endpoints that did default serialization to a legacy list. We switched the default behavior to use the new read serializer for endpoints moving forward, excluding the legacy list. We worked to shrink the legacy list by going through the same double read experimentation framework that we used before, validating performance and backwards compatibility of the default serializer.

- For default serialization, we made a small change to exclude serializing properties by default unless explicitly overridden. This addresses the performance issue around expensive properties moving forward.

- We are also blocking new uses of the “all fields” anti-pattern in serializers to force developers to actively choose which fields they want to serialize to the frontend.
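
The default-serializer changes in the list above could look roughly like this. The endpoint names, return values, and helper names are all hypothetical. The sketch only shows the two rules: legacy endpoints keep the old default, and properties are serialized only when explicitly requested.

```python
# Hypothetical legacy list: endpoints not yet validated by double reading.
LEGACY_ENDPOINTS = {"legacy_reports"}


def pick_default_serializer(endpoint_name):
    """New endpoints get the read serializer; legacy ones keep the old default."""
    if endpoint_name in LEGACY_ENDPOINTS:
        return "drf_default"
    return "read_serializer"


def default_fields(model_fields, model_properties, include_properties=()):
    """Fields serialize by default; properties only when explicitly opted in."""
    unknown = set(include_properties) - set(model_properties)
    if unknown:
        raise ValueError(f"unknown properties: {sorted(unknown)}")
    opted_in = [p for p in model_properties if p in include_properties]
    return list(model_fields) + opted_in
```

Shrinking the legacy list is then a matter of removing an endpoint name once its double-read results look clean.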

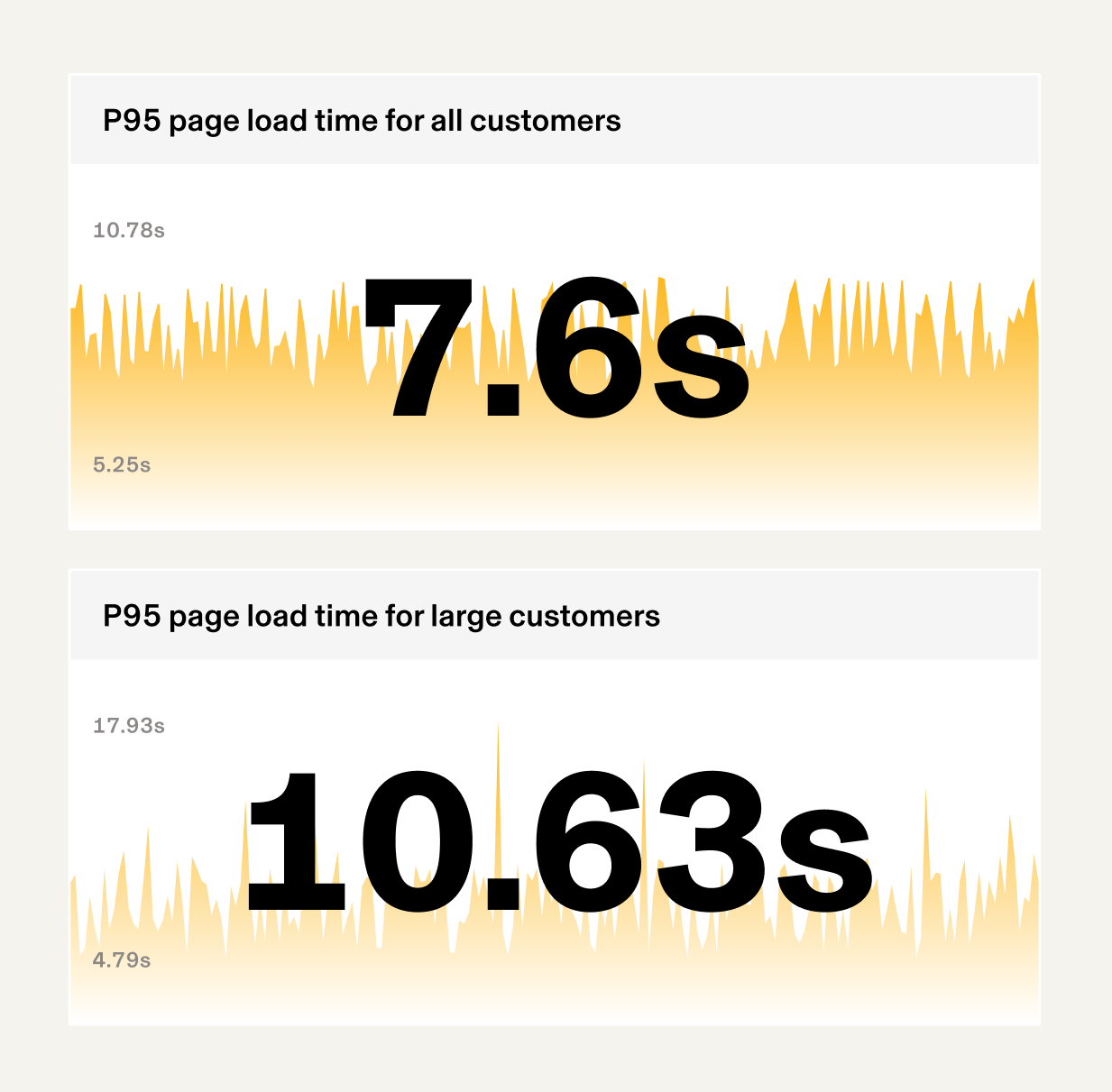

By splitting up the read and write path and speeding up the read path at the framework level, we got a significant improvement to our sitewide performance. We cut our p95 page load times by over half across our application, and ensured that our largest customers were able to use Rippling seamlessly. The improvements are not solely attributable to faster serializers, but they played a big part.

Summary

Takeaways:

- Framework choices are important. They should be made in a way to maximize developer velocity without enabling easy performance mistakes that can avalanche into sitewide performance regressions.

- Data-driven investigation and development is critical to solve complex performance problems. In a large application, developer intuition alone isn’t enough to solve sitewide slow-downs. By starting with a metric and goal, e.g. “we want to get p95 page load time < 4 seconds for all of our pages,” and working backwards from it, we were able to make real progress.

- Careful experimentation and granular observability can make large-scale rollouts safe and seamless.

Looking ahead:

- We’ve controlled our sitewide performance issues in recent months, but we’re not done yet. Now that we have broad new serializer adoption in our codebase, any future optimizations we make to the read serializer will improve our sitewide performance.

- You may have noticed that the serializer improvements don’t resolve one fundamental scaling problem. Even though the new serializers make API responses faster, if we are loading O(n) company data to the frontend our site will be slower for larger companies. This is another problem that we’ve already started tackling with cursor-based pagination.

If you love solving similar problems, we're hiring! Click here to learn more.